IRL to VR

Scanning a room with ARKit (LiDAR!) to be viewed in a VR app

I have been very pleased with the Meta Quest 2 device (Beat Saber!), and found it particularly cool when the family was not able to get together. As a family who games, we occasionally jumped into a VR game while spread across a few cities. It is _way_ better than yet another Zoom call, and very immersive: you could identify people from their avatar by subtle cues like height, or in the case of my partner, by the way she emphasizes her comments with her hands :-) . At the same time I have been peripherally interested in 3D scanning and sensors since the Kinect era, and have been following the announcements about ARKit scanning functions from Apple. This set me up for a project that came together on a break around New Year's, when I got a new iPhone with a LiDAR sensor:

The side project was to use the Room Scanner sample to grab a scan of a room and then put the model into an adapted Unity VR sample app to walk through the space. Along the way my goal was to play with different parts of the technology: Swift, ARKit, 3D models, Unity, Quest/XR APIs. I am in the early part of the learning curve for most of these technologies, so your mileage may vary.

Bottom Line Demo

I was able to successfully scan a meeting room called “Paradiso” in our office, and get a sense of the state of some of the technologies along the way. Check out the walk through:

Method

I set up a very minimal process for the spike, basically manually processing the data between the iOS scanning sample and the Meta VR Sample for Locomotion.

Observations

Observation 1: U in USDZ is not yet Universal

While I do not work day to day in Unity or other 3D systems, I have used them in a couple of side projects. I am very often struck by their power, and by the important and potent mental shift between modelling and recording, animating, or other kinds of visual layout. I am also struck that this feels like a part of the tech industry that has grown up very organically and with high-stakes proprietary tech. File formats are named after different companies, there seem to be a lot of options, and each of them seems to cover only part of the story: a great format for structure and articulation stores textures separately, or there is little standardization or scaffolding for interactions (menus end up being arbitrary planes drawn in a scene rather than a semantic abstraction). I also personally feel the separate mental models in play between the technology and the visual design origins of this space: I keep reaching for code or config file metaphors, where things are instead composed by visually attaching properties to the assets themselves. Powerful, but another duality.

So I was encouraged by the description and concept behind Universal Scene Description (.USD, .USDZ) files, which were originally created and released by Pixar and focus on supporting all the elements of a scene and on scaling to large collaborative groups. It is a very cool format with a very composable data structure, but I ended up spending a disproportionate amount of time trying to get the scan results into Unity, where the “Universal” format does not seem to be supported. Up until a 2020 LTS release there was a pre-release Unity package to import USD, but it no longer seems to be present in the latest few releases. I did find an older (abandoned) utility, but it didn’t have prebuilt packages for macOS. I also started down the path of building the Unity package for importing assets from source, but ran into dependency challenges, stopped pulling on that thread, and went with a manual one-time conversion in a tool.

As you might expect, each vendor produces free tools that read many competing formats but primarily export in their preferred format. For example, Reality Converter from Apple really only exports USDZ. Ultimately I looked at the formats Unity supports or prefers and was able to use DAE by just opening the USDZ file in Xcode and exporting. It seems Unity would prefer FBX files, but I did not identify a one-step conversion to that format.

Next steps in the spike in this regard would be to explore parsing and manipulation libraries available here and determine which formats would make sense for further processing (applying textures, joining room scans, incorporating into the viewer dynamically).
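As a first step in that direction, a USDZ file is an uncompressed zip archive, so the standard library of most languages can at least show what is packed inside one. The sketch below is a minimal, hedged example in Python and was not part of the original spike; the function names are made up for illustration, and it only lists the archive members without parsing the USD layers themselves.

```python
import zipfile


def list_usdz_contents(path_or_file):
    """Return (name, size_in_bytes) for each file packed inside a USDZ archive.

    Hypothetical helper: relies only on the fact that USDZ is a zip container.
    """
    with zipfile.ZipFile(path_or_file) as archive:
        return [(info.filename, info.file_size) for info in archive.infolist()]


def find_scene_layers(path_or_file):
    """Return the archive members that look like USD layers, judged by extension."""
    usd_extensions = (".usd", ".usda", ".usdc")
    return [
        name
        for name, _size in list_usdz_contents(path_or_file)
        if name.lower().endswith(usd_extensions)
    ]
```

Calling `list_usdz_contents("Room.usdz")` on an exported scan (the file name here is an assumption) would show whether the package holds only geometry or also textures, which helps when deciding which format to convert to for further processing.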

Observation 2: ARKit room scan seems sophisticated

In a distant past project I worked with an algorithm that used stereoscopic images to detect edges and distance, and it kicked out a bunch of planes and lines. I had expected the room scanner to do roughly that, but add LiDAR and get some good effects from sensor-fusion type concepts. Instead I was surprised that it effectively simplified the room, extrapolated architecture past occlusions, and inferred and modelled objects, all convincingly and in real time with visual prompts.

Aside: Room scanning is RoomCaptureView, a drop-in component. The sample app really just provides a delegate to receive and store the data and glues some pieces together, so the screenshots and features below are basically built in under the covers. It is a UIView component and not SwiftUI, and I spent some time trying to model it in SwiftUI using a really nicely designed bridge called UIViewRepresentable, for which I found a good walkthrough. SwiftUI is effectively a declarative rendering system (think React), while the room scanning component relies on procedural calls. In the end the demo just used the vanilla room scanning app, built and loaded onto my phone.

As you can see here, the room has exposed infrastructure in the ceiling, so the top edge of the wall is only occasionally visible. When it is visible, the app clearly extrapolates the line and knows how to show the line as hidden behind items in real time. The overall room sketch shown in white while scanning is also oriented and rendered during the scan.

You may also notice the edge doesn’t line up perfectly with the edge of the window here and seems to come in from the wall. This is an example of a simplification of the room done by the scanner that I think is useful but doesn’t fully match reality here: the windows are set well back from the wall along a window sill, but do go fully to the ceiling. In this case the wall is deemed to be on the plane from the bottom window sill that comes into the room. This reflects the real floor space and achieves a simplified and manageable model — but certainly loses something about how open and wide the room feels.

Also surprising was the degree of modelling done to the room. The result was not raw planes and lines, but something much more amenable to post processing that identified the walls, windows, and doors separately, as well as the objects in the room such as chairs, table and television. All while skipping the gear on the table or the backpack by the wall or the duct work on the ceiling.

This modelling sets the output up really well to be imported and manipulated manually in a tool like SketchUp for IRL designers of spaces. It also results in a surprisingly tight model size of 55 KB. And lastly, it sets up possible next steps in this spike, where images could capture a texture for the components, creating a very efficient and still realistic model of a space to experience virtually.

One area that did not perform as smoothly as desired was capturing a structural post that extended out from the wall. Both the top and bottom of the post were hard to scan with items in the way, but even after a couple of attempts the scan did not capture the coarse structure of the concrete pillar: it identified the edges but did not build a volume out of them. In contrast, the triangular-edged pillar seemed to be captured more successfully.

Observation 3: LiDAR is not magical

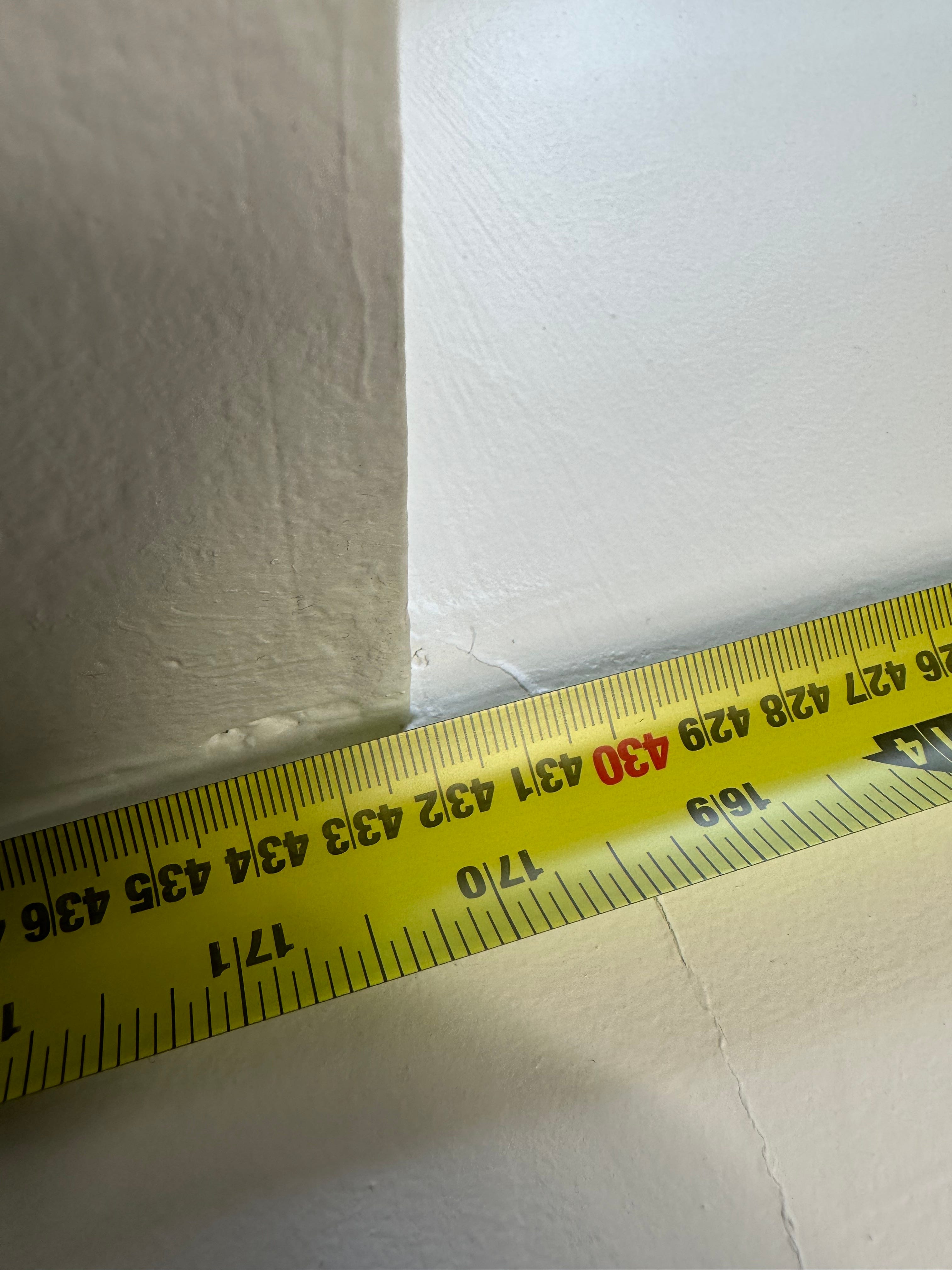

Or at least LiDAR is not perfectly accurate in the context of the simplification and modelling system. One potential use for these scans is to get accurate information about a space: people sharing information about a home almost never know the real floor space or the length of a wall, and it is time consuming and error prone to capture this information with measuring tools. While images only give relative sizes, this scanning process, particularly with LiDAR, does surface real distances in the model. However, over about 4.5 meters the scan was off by about 5%.

Given the accuracy LiDAR seems to be able to achieve (measuring a large cliff face to +/- 10 cm), it seems likely that this is partially related to the simplification process applied. One possible source of the difference is some soundproofing wall panels that extend into the room and might have been inferred as the plane of the wall. The result is that the scan seems accurate enough for coarse design and the visual experience of walking through the space, but not accurate enough to, for instance, buy new windows without an additional measurement process.
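To put that 5% in perspective, here is a small back-of-the-envelope sketch in Python. It assumes the relative error is uniform along the wall and, for the area estimate, that both floor dimensions are off by the same 5% in the same direction; neither assumption was verified in the spike, and the function names are made up for illustration.

```python
def absolute_error_m(length_m: float, relative_error: float) -> float:
    """Absolute error in meters for a length measured with a given relative error."""
    return length_m * relative_error


def area_relative_error(relative_error: float) -> float:
    """Relative error of a rectangular area when both sides share the same relative error."""
    return (1 + relative_error) ** 2 - 1


wall_length_m = 4.5     # approximate wall length from the scan comparison
relative_error = 0.05   # approximate 5% discrepancy observed

error_m = absolute_error_m(wall_length_m, relative_error)
area_error = area_relative_error(relative_error)

print(f"Wall length error: about {error_m * 100:.1f} cm")   # about 22.5 cm
print(f"Floor area error:  about {area_error:.2%}")         # about 10.25%
```

Under those assumptions the wall is off by roughly 22 cm, and a floor area computed from two such walls would be off by roughly 10%, which is consistent with the scan being fine for a walkthrough but not for ordering windows.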

Observation 4: Unity and VR development has a lot of open space

The tool chain for working with VR in Unity seems stable and the examples comprehensive, and there still seems to be a ton of opportunity in the application space. I am more bullish than most that VR could take off as a modality, and much of the foundational investment has been made or is being made. But like the dawn of mobile technology, which cultivated a personal interaction mode and in turn set the stage for social media and more, a lot of the impact will come from the applications that arise. I will definitely want to come back to this platform for projects in the future.

So What …

The scanning from ARKit is sophisticated enough to produce a nicely simplified model. Seems interesting for direct use in modelling a space for renovation or exploration, but also set up well to do interesting post processing to build out full spaces like virtual tours — but more focused on a fully explorable space than the systems that feel like panoramic photos. There is also interesting secondary data like sizing for accurate information about the space, and enough information to build ML models assessing the space.

What Next …

Possible next directions for this spike would be:

- to try to access the camera data directly to capture textures to apply to the structural model surfaced

- to try post processing multiple room scans to build up complete spaces

- to try to access lower level data (the dense depth map) to explore measurement accuracy